One of the things my team at StackOverflow does is maintain the CI/CD system which builds all the software we use and produce. This includes the Stack Exchange Android App.

Automating the CI/CD workflow for Android apps is a PITA. The process is full of trips and traps. Here are some notes I made recently.

First, [this is the paragraph where I explain why CI/CD is important. But I'm not going to write it because you should know how important it is already. Plus, Google definitely knows already. That is why the need to write this blog post is so frustrating.]

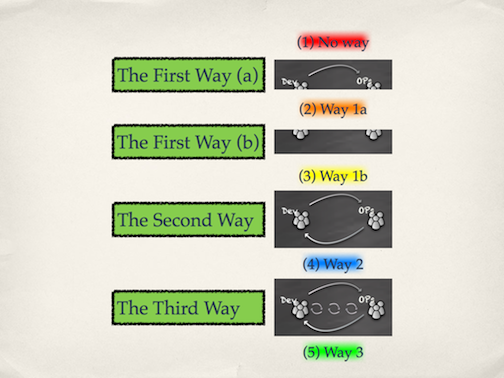

And therefore, there are two important things that vendors should provide that make CI/CD easy for developers:

- Rule 1: Builds should work from the command line on a multi-user system.
    - Builds must work from a script, with no UI popping up. A CI system only has stdin/stdout/stderr.
    - Multi-user systems protect files that shouldn't be modified from being modified.
    - The build process should not rely on the internet. If it must download a file during the build, then we can't do builds if that resource disappears.
- Rule 2: The build environment should be reproducible in an automated fashion.
    - We must be able to create the build environment on a VM, tear it down, and build it back up again. We might do this to create dozens (or hundreds) of build machines, or we might delete the build VM between builds.
    - This process should not require user interaction.
    - It should be possible to automate this process, in a language such as Puppet or Chef. The steps should be idempotent.
    - This process should not rely on any external systems.
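To make Rule 1 concrete, here is a minimal sketch of how a CI agent might invoke a build step: stdin comes from /dev/null and DISPLAY is unset, so anything that tries to prompt or open a window fails quickly instead of hanging. The function name `run_headless` is made up for this example, and the `gradlew` line in the comment is only an illustration.

```shell
#!/bin/sh
# run_headless CMD [ARGS...]
# Hypothetical helper: run a build command with no terminal on stdin
# and no X display, roughly what a CI agent provides.
run_headless() {
    (
        unset DISPLAY        # nothing to pop a window on
        "$@" </dev/null      # any prompt reads EOF immediately
    )
}

# Example (illustrative only):
#   run_headless ./gradlew assembleRelease
```

The subshell keeps the `unset` from leaking into the calling script, and the exit status of the command is passed straight through.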

Android builds can be done from the command line. However, the process itself updates files in the build area. Creating the build environment simply cannot be automated without repackaging all of the files (something I'm not willing to do).

Here are my notes from creating a CI/CD system using TeamCity (a commercial product comparable to Jenkins) for the StackOverflow mobile developers:

Step 1. Install Java 8

The manual way:

CentOS has no pre-packaged Oracle Java 8 package. Instead, you must download it and install it manually.

Method 1: Download it from the Oracle web site.

Pick the latest release, 8uXXX where XXX is a release number. (Be sure to pick "Linux x64" and not "Linux x86").

Method 2: Use the above web site to figure out the URL, then use this code to automate the downloading: (H/T to this SO post)

# cd /root

# wget --no-cookies --no-check-certificate --header \
"Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" \
"http://download.oracle.com/otn-pub/java/jdk/8u102-b14/jdk-8u102-linux-x64.rpm"

Dear Oracle: I know you employ more lawyers than engineers, but FFS please just make it possible to download that package with a simple curl or wget. Oh, and the fact that the certificate is invalid means that if this did come to a lawsuit, people would just claim that a MITM attack forged their agreement to the license.
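Since the download skips certificate checking, one partial mitigation is to pin a checksum and refuse to install anything that doesn't match. This is a minimal sketch, assuming `sha256sum` is available (it ships with coreutils on CentOS). The function name and the `PINNED_JDK_SHA256` variable are hypothetical, and the digest itself has to be recorded once from a copy you trust.

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HEX
# Succeeds only if FILE exists and its SHA-256 digest equals EXPECTED_HEX.
verify_sha256() {
    file=$1
    expected=$2
    # sha256sum prints "<hex>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" | awk '{print $1}')
    [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Hypothetical usage; PINNED_JDK_SHA256 is a placeholder, not a real value:
#   verify_sha256 jdk-8u102-linux-x64.rpm "$PINNED_JDK_SHA256" \
#       && yum localinstall -y jdk-8u102-linux-x64.rpm
```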

Install the package:

# yum localinstall jdk-8u102-linux-x64.rpm

...and make a symlink so that our CI system can specify JAVA8_HOME=/usr/java/jdk and not have to update every individual configuration.

# ln -sf /usr/java/jdk1.8.0_102 /usr/java/jdk
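If this step is ever handed to Puppet, Chef, or a plain script, it helps to make it idempotent. Here is a minimal sketch, with a made-up function name `ensure_symlink`. One detail to watch: when the link already exists and points at a directory, `ln -sf` creates the new link inside that directory instead of replacing it. The `-n` flag avoids that.

```shell
#!/bin/sh
# ensure_symlink TARGET LINK
# Make LINK a symlink to TARGET; do nothing if it already is one.
ensure_symlink() {
    target=$1
    link=$2
    if [ -L "$link" ] && [ "$(readlink "$link")" = "$target" ]; then
        return 0    # already correct, nothing to change
    fi
    # -n: replace LINK itself rather than descending into the
    # directory that an existing LINK points at.
    ln -sfn "$target" "$link"
}

# Example, using the paths from this step:
#   ensure_symlink /usr/java/jdk1.8.0_102 /usr/java/jdk
```

Running it twice changes nothing the second time, and pointing it at a newer JDK directory later swaps the link in place.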

We could add this package to our YUM repo, but the benefit would be negligible, and it is questionable whether the license permits it.

EVALUATION: This step violates Rule 2 above because the download process is manual. It would be better if Oracle provided a YUM repo. In the future I'll probably put it in our local YUM repo. I'm sure Oracle won't mind.

Step 2. Party like it's 2010.

The Android tools are compiled for 32-bit Linux. I'm not sure why. I presume it is because they want to be friendly to the few developers out there that still do their development on 32-bit Linux systems.

However, I have a few other theories: (a) The Android team has developed a time machine that lets them travel back to 2010. I happen to know for a fact that Google moved to 64-bit Linux internally around 2011; they created teams of people to find and eliminate any 32-bit Linux hosts. Therefore the only way the Android team could actually still be developing on 32-bit Linux is if they have either hidden their machines from their employer or have a time machine. (b) There is no "b". I can't imagine any other reason, and I'm jealous of their time machine.

Therefore, we install some 32-bit libraries to gain backwards compatibility. We do this and pray that the other builds happening on this host won't get confused. Sigh. (This is one area where containers would be very useful.)

# yum install -y glibc.i686 zlib.i686 libstdc++.i686
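To see for yourself which SDK binaries are 32-bit, you can read the ELF header directly. This is a minimal sketch that only needs `od`, so it works even on hosts where `file` isn't installed. The function name `elf_class` is made up, and the `aapt` path in the comment is just an example of where a build tool might live.

```shell
#!/bin/sh
# elf_class FILE
# Print 32 or 64 according to the EI_CLASS byte of an ELF header.
# Fails if FILE does not start with the ELF magic number.
elf_class() {
    # od prints the first five bytes as unsigned decimal numbers.
    set -- $(od -An -tu1 -N5 "$1" 2>/dev/null)
    [ "$#" -eq 5 ] || return 1
    # Bytes 0-3 are the magic number: 0x7f 'E' 'L' 'F'.
    [ "$1 $2 $3 $4" = "127 69 76 70" ] || return 1
    # Byte 4 is the class: 1 means 32-bit, 2 means 64-bit.
    case $5 in
        1) echo 32 ;;
        2) echo 64 ;;
        *) return 1 ;;
    esac
}

# Example (path is illustrative):
#   elf_class /usr/java/android-sdk/build-tools/23.0.3/aapt
```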

EVALUATION: B-. Android should provide 64-bit binaries.

Step 3. Install the Android SDK

The SDK has a command-line installer. The URL is obscured, making it difficult to automate this download. However, you can find the current URL by reading this web page, then clicking on "Download Options", and then selecting Linux. The last time we did this, the URL was: https://dl.google.com/android/android-sdk_r24.4.1-linux.tgz

You can install this in 1 line:

cd /usr/java && tar xzpvf /path/to/android-sdk_r24.4.1-linux.tgz

EVALUATION: Violates Rule 2 because it is not in a format that can easily be automated. It would be better to have this in a YUM repo. In the future I'll probably put this tarfile into an RPM with an install script that untars the file.
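Until there is a proper package, a small guard makes the untar step safe to re-run. This is a minimal sketch with a made-up function name, `unpack_once`. The third argument is whatever top-level directory the tarball actually creates. Check that with `tar tzf` first, because the name is an assumption and not something this script can know.

```shell
#!/bin/sh
# unpack_once TARBALL PARENT_DIR EXPECTED_DIR
# Extract TARBALL into PARENT_DIR unless EXPECTED_DIR already exists.
unpack_once() {
    tarball=$1
    parent=$2
    expected=$3
    if [ -d "$expected" ]; then
        echo "already unpacked: $expected"
        return 0
    fi
    mkdir -p "$parent" && tar -xzpf "$tarball" -C "$parent"
}

# Example (the directory name is an assumption; verify it with tar tzf):
#   unpack_once /path/to/android-sdk_r24.4.1-linux.tgz /usr/java /usr/java/android-sdk
```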

Step 4. Install/update the SDK modules.

Props to the Android SDK team for making an installer that works from the command line. Sadly it is difficult to figure out which modules should be installed. Once you know the modules you need, specifying them on the command line is "fun"... which is my polite way of saying "ugly."

First I asked the developers which modules they need installed. They gave me a list, which was wrong. It wasn't their fault. There's no history of what got installed. There's no command that shows what is installed. So there was a lot of guess-work and back-and-forth. However, we finally figured out which modules were needed.

The command to list all modules is:

/usr/java/android-sdk/tools/android list sdk -a

The modules we happened to need are:

1- Android SDK Tools, revision 25.1.7

3- Android SDK Platform-tools, revision 24.0.1

4- Android SDK Build-tools, revision 24.0.1

6- Android SDK Build-tools, revision 23.0.3

7- Android SDK Build-tools, revision 23.0.2

9- Android SDK Build-tools, revision 23 (Obsolete)

19- Android SDK Build-tools, revision 19.1

29- SDK Platform Android 7.0, API 24, revision 2

30- SDK Platform Android 6.0, API 23, revision 3

39- SDK Platform Android 4.0, API 14, revision 4

141- Android Support Repository, revision 36

142- Android Support Library, revision 23.2.1 (Obsolete)

149- Google Repository, revision 32

If that list looks like it includes a lot of redundant items, you are right. I don't know why we need 5 versions of the build tools (one of which is marked "obsolete") and 3 versions of the SDK. However, I do know that if I remove any of those, our builds break.

You can install these with this command:

/usr/java/android-sdk/tools/android update sdk \
--no-ui --all --filter 1,3,4,6,7,9,19,29,30,39,141,142,149

However there's a small problem with this. Those numbers might be different as new packages are added and removed from the repository.

Luckily there is a "name" for each module that (I hope) doesn't change. However the names aren't shown unless you specify the -e option:

# /usr/java/android-sdk/tools/android list sdk -a -e

The output looks like:

Packages available for installation or update: 154

----------

id: 1 or "tools"

Type: Tool

Desc: Android SDK Tools, revision 25.1.7

----------

id: 2 or "tools-preview"

Type: Tool

Desc: Android SDK Tools, revision 25.2.2 rc1

...

...
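To turn a list of numeric ids into stable names, the `id:` lines can be pulled out with a few lines of awk. This is a minimal sketch that relies only on the `id: N or "name"` format shown in the output above. The function name `sdk_id_names` is made up.

```shell
#!/bin/sh
# sdk_id_names
# Read the extended listing on stdin and print one "ID NAME" pair per
# line, taken from lines of the form:  id: 1 or "tools"
sdk_id_names() {
    awk '$1 == "id:" && $3 == "or" {
        name = $4
        gsub(/"/, "", name)    # strip the surrounding quotes
        print $2, name
    }'
}

# Example:
#   /usr/java/android-sdk/tools/android list sdk -a -e | sdk_id_names
```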

Therefore a command that will always install that set of modules would be:

/usr/java/android-sdk/tools/android update sdk --no-ui --all \
--filter tools,platform-tools,build-tools-24.0.1,\
build-tools-23.0.3,build-tools-23.0.2,build-tools-23.0.0,\
build-tools-19.1.0,android-24,android-23,android-14,\
extra-android-m2repository,extra-android-support,\
extra-google-m2repository
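A comma-separated list split across backslash continuations is easy to break with one stray space or a missing backslash. One alternative is to keep the module names in a file, one per line, and build the `--filter` argument from it. This is a minimal sketch. The function name and the `sdk-modules.txt` file are hypothetical.

```shell
#!/bin/sh
# sdk_filter_from_file FILE
# Print the module names listed in FILE (one per line, "#" starts a
# comment) as a single comma-separated string.
sdk_filter_from_file() {
    # Drop comments, strip whitespace, drop blank lines, join with commas.
    sed -e 's/#.*//' -e 's/[[:space:]]//g' -e '/^$/d' "$1" | paste -sd, -
}

# Example (sdk-modules.txt is a hypothetical file you maintain):
#   /usr/java/android-sdk/tools/android update sdk --no-ui --all \
#       --filter "$(sdk_filter_from_file sdk-modules.txt)"
```

The file can also live in version control, which gives you the history of what was installed that the SDK itself doesn't keep.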

Feature request: The name assigned to each module should be listed in the regular listing (without the -e) or the normal listing should end with a note: "For details, add the -e flag."

EVALUATION: Great! (a) Thank you for the command-line tool. The docs could be a little bit better (I had to figure out the -e trick) but I got this to work. (b) Sadly, I can't automate this with Puppet/Chef because they have no way of knowing if a module is already installed, therefore I can't make an idempotent installer. Without that, the automation would blindly re-install the modules every time it runs, which is usually twice an hour. (c) I'd rather have these individual modules packaged as RPMs so I could just install the ones I need. (d) I'd appreciate a way to list which modules are installed. (e) update should not re-install modules that are already installed, unless a --force flag is given. What are we, barbarians?
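One possible workaround for (b) and (d) is to guess at installation state from the directories on disk. This is a rough sketch and not anything the SDK documents. The mapping from module name to directory in `sdk_module_dir` is an assumption based on how an unpacked SDK tends to be laid out, and both function names are made up. A directory existing says nothing about which revision is in it.

```shell
#!/bin/sh
# sdk_module_dir NAME
# Print the directory, relative to the SDK root, where module NAME is
# ASSUMED to live. Fails for names this sketch does not know about.
sdk_module_dir() {
    case $1 in
        tools|platform-tools)        echo "$1" ;;
        build-tools-*)               echo "build-tools/${1#build-tools-}" ;;
        android-*)                   echo "platforms/$1" ;;
        extra-android-m2repository)  echo "extras/android/m2repository" ;;
        extra-android-support)       echo "extras/android/support" ;;
        extra-google-m2repository)   echo "extras/google/m2repository" ;;
        *)                           return 1 ;;
    esac
}

# sdk_missing_modules SDK_ROOT NAME...
# Print each NAME that has no directory (or no known mapping).
sdk_missing_modules() {
    root=$1
    shift
    for name in "$@"; do
        dir=$(sdk_module_dir "$name") || { echo "$name"; continue; }
        [ -d "$root/$dir" ] || echo "$name"
    done
}

# Example:
#   sdk_missing_modules /usr/java/android-sdk tools build-tools-23.0.3 android-24
```

If the output is empty, the automation can skip the update entirely. Otherwise the printed names can be joined with commas and passed to `--filter`.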

Step 4: Install license agreements

The software won't run unless you've agreed to the license. According to Android's own website you do this by asking a developer to do it on their machine, then copy those files to the CI server. Yes. I laughed too.

EVALUATION: There's no way to automate this. In the future I will probably make a package out of these files so that we can install them on any CI machine. I'm taking suggestions on what I should call this package. I think android-sdk-lie-about-license-agreements.rpm might be a good name.
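Short of building that RPM, the copy itself can at least be scripted so it is repeatable. This is a minimal sketch, assuming the accepted-license files sit in a `licenses` directory under the SDK root. That location is an assumption, so confirm it on the developer's machine. The function name and the source path in the example are hypothetical.

```shell
#!/bin/sh
# install_licenses SRC_DIR SDK_ROOT
# Copy license-acceptance files from SRC_DIR into SDK_ROOT/licenses,
# skipping any file whose content is already identical.
install_licenses() {
    src=$1
    dest=$2/licenses    # ASSUMED location of the license files
    mkdir -p "$dest" || return 1
    for f in "$src"/*; do
        [ -f "$f" ] || continue
        name=$(basename "$f")
        if ! cmp -s "$f" "$dest/$name" 2>/dev/null; then
            cp "$f" "$dest/$name" || return 1
            echo "installed $name"
        fi
    done
}

# Example (the source path is hypothetical):
#   install_licenses /srv/ci/android-licenses /usr/java/android-sdk
```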

Step 5: Fix stupidity.

At this point we thought we were done, but the app build process was still breaking. Sigh. I'll save you the long story, but basically we discovered that the build tools want to be able to write to /usr/java/android-sdk/extras. It isn't clear if they need to be able to create files in that directory or write within the subdirectories. Fuck it. I don't have time for this shit. I just did:

chmod 0775 /usr/java/android-sdk/extras

chown $BUILDUSER /usr/java/android-sdk

chown -R $BUILDUSER /usr/java/android-sdk/extras

("$BUILDUSER" is the username that does the compiles. In our case it is teamcity because we use TeamCity.)

Maybe I'll use my copious spare time some day to figure out if the -R is needed. I mean... what sysadmin doesn't have tons of spare time to do science experiments like that? We're all just sitting around with nothing to do, right? In the meantime, -R works so I'm not touching it.
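For anyone who does have the spare time, the experiment is short: touch a marker file, run one build, then list everything under extras that is newer than the marker. Here is a minimal sketch. The function name and the marker path are made up.

```shell
#!/bin/sh
# changed_since MARKER DIR
# List regular files under DIR that were created or modified after
# MARKER was last touched.
changed_since() {
    find "$2" -type f -newer "$1" -print | sort
}

# Example (the marker path is arbitrary):
#   touch /tmp/build-marker
#   ...run one build as $BUILDUSER...
#   changed_since /tmp/build-marker /usr/java/android-sdk/extras
```

If every path it prints is inside a subdirectory, the build is writing within the tree. Anything listed directly under extras means it creates files there too.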

EVALUATION: OMG. Please fix this, Android folks! Builds should not modify themselves! At least document what needs to be writable!

Step 6: All done!

At this point the CI system started working.

Some of the steps I automated via Puppet, the rest I documented in a wiki page. In the future when we build additional CI hosts Puppet will do the easy stuff and we'll manually do the rest.

I don't like having manual steps but at our scale that is sufficient. At least the process is repeatable now.

If I had to build dozens of machines, I'd wrap all of this up into RPMs and deploy them. However then the next time Android produces a new release, I'd have to do a lot of work wrapping the new files in an RPM, testing them, and so on. That's enough effort that it should be in a CI system. If you find that you need a CI system to build the CI system, you know your tools weren't designed with automation in mind.

Hopefully this blog post will help others going through this process.

If I have missed steps, or if I've missed ways of simplifying this, please post in the comments!

P.S. Dear Android team: I love you folks. I think Android is awesome and I love that you name your releases after desserts (though I was disappointed that "L" wasn't Limoncello... but that's just me being selfish). I hope you take my snark in good humor. I am a sysadmin who wants to support his developers as best he can, and fixing these problems with the Android SDK would really help. Then we can make the most awesome Android apps ever... which is what we all want. Thanks!
