Lately there has been a renewed debate over the use of encrypted communication. Terrorists could be using encryption to hide their communication. Everyone knows this. The problem is that encryption is required for ecommerce and just about everything on the web.

Should encryption be banned? regulated? controlled?

Lately there have been a number of proposals, good and bad, for how to deal with this. Luckily I have a solution that solves all the problems!

My solution: (which is obvious and solves all problems)

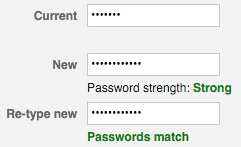

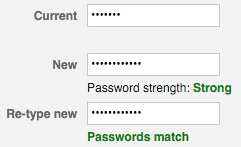

My solution is quite simple: Every time a website asks you to create or change a password, it would send a copy to the government. The government would protect this password database from bad people and promise only to use it when they really really really really really need to. Everyone can still use encryption, but if law enforcement needs access to our data, they can access it.


FAQ:

I've received a number of questions about my proposal. Here are my replies:

Q: Tom, which government?

Duh. THE government.

Q: Tom, what about websites outside the U.S.?

Ha! Silly boy. The internet doesn't exist outside of the U.S. Does it? Ok, I guess we need a plan in case countries figure out how to make webpages.

For example if someone in Geneva had the nerve to create a website, they'd just turn the passwords over to their government who would have an arrangement with the U.S. government to share passwords. This would work because all governments agree about what constitutes "terrorism", "due process", and "jurisprudence". Alternatively these Genevaians could just turn the passwords over to the U.S. directly. They trust us. Right?

Q: Tom, what if the government misuses these passwords?

That won't happen and let me explain why: There would be a policy that forbids that kind of thing.

If they have a written policy that employees may not view the passwords or use them inappropriately, it won't happen. I believe this because in the past few years I've seen CEOs make statements like that and I always trust CEOs. I believe in capitalism because I'm no dirty commie hippy like yourself.

Q: Tom, how do we define when the government can use the database?

Dude. What part of "really really really really really" didn't you understand? They can't just really really really really need to use one of those passwords. They have to really really really really really (5 reallys!) need to use it!


Q: Tom, what if someone steals the government's database?

Look, the government has top, top people that could protect the database. It would be as simple as protecting the codes that launch nuclear missiles.

Q: Tom, doesn't the OPM database leak prove this is unworkable?

What? Why would the government name a database after one of the best Danny DeVito movies ever? Look, that movie was fictional. If you aren't going to take this debate seriously, stay out of it. Ok?

Q: Tom, wouldn't this encourage terrorists to make their own online systems?

Dude, you aren't paying attention. They'd be required to turn their passwords over to the government just like everyone else! If they don't, we know they are terrorists!

Conclusion:

Hi. Thank you for reading this far.

Obviously the above proposal is not something I support. It is an analogy to help you understand what the FBI and other law enforcement organizations are proposing. When you hear about "law enforcement backdoor" legislation or requiring that phones be "court unlockable", this is what they mean.

The proposed plans aren't about passwords but "encryption keys". Encryption keys are "the technology behind the technology" that enables passwords to be transmitted across the internet securely. If you have a company's encryption keys you can, essentially, wiretap the company and decode all their private communication.

Under the proposal, every device would have a password (or key) that could be used to gain access to the encryption keys. The government would promise not to use the password (key) unless they had a warrant. We'd just have to hope that nobody steals their list of passwords.
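For readers who think better in code, here is a toy sketch of why a stored copy of a key is the whole problem. It is an illustration under loud assumptions, not real cryptography: the hash-based `xor_cipher` stands in for a proper cipher, and the names `escrow_db` and `toms-phone` are made up for this example. The point it tries to show is that decryption does not check who is asking. Whoever holds the key gets the plaintext.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Derive a toy keystream by hashing key + counter (illustration only)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt by XORing data with the keystream (same operation)."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


# Hypothetical escrow database: device id -> copy of that device's key.
escrow_db = {}

device_key = secrets.token_bytes(32)
escrow_db["toms-phone"] = device_key  # the mandated copy

ciphertext = xor_cipher(device_key, b"meet me at noon")

# The owner can decrypt...
owner_view = xor_cipher(device_key, ciphertext)

# ...and so can anyone holding a copy of the escrow database, warrant or not.
stolen_copy = dict(escrow_db)
thief_view = xor_cipher(stolen_copy["toms-phone"], ciphertext)

# Someone without the key only gets garbage.
wrong_view = xor_cipher(secrets.token_bytes(32), ciphertext)
```

Nothing in `xor_cipher` knows or cares whether the caller has a warrant. The "promise" lives entirely outside the math, in whoever guards `escrow_db`.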

Obviously neither of these proposals is workable.

This debate is not new. 20 years ago FBI and NSA officials went to the IETF meetings (where the Internet protocols are ratified) and proposed these ideas. In 1993-1995 this debate was huge and nearly tore the IETF apart. Finally cooler heads prevailed and rejected the proposals. It turned out that the FBI's predictions were just scare tactics. None of their dire predictions came true. "Indeed, in 1992, the FBI's Advanced Telephony Unit warned that within three years Title III wiretaps would be useless: no more than 40% would be intelligible and in the worst case all might be rendered useless. The world did not "go dark." On the contrary, law enforcement has much better and more effective surveillance capabilities now than it did then." (citation)

We must reject these proposals just like we did in the early 1990s. Back then most Americans didn't even know what "the internet" was. The proposals were rejected in the 1990s because of a few dedicated computer scientists. Today the call to reject these proposals should come from everyone: Sysadmins, moms and dads, old and young, regardless of political party or affiliation.

All the encryption lingo is overwhelmingly confusing and technical. Just remember that when you hear these proposals, all they're really saying is: The FBI/NSA want easy access to anything behind your password.
